AI-Native UGC Game Engine, Reasoning VLMs, Kyutai TTS, Collective Intelligence for Frontier AI, pay per crawl, String by Pipedream, Qwen VLo, Ovis-U1-3B, Context Engineering and more


Hey there! Welcome back to AI Brews - a concise roundup of this week's major developments in AI.

In today’s issue (Issue #106):

AI Pulse: Weekly News at a Glance

Weekly Spotlight: Noteworthy Reads and Open-source Projects

AI Toolbox: Product Picks of the Week

🗞️🗞️ Weekly News at a Glance

Dynamics Lab introduced Mirage, the world's first real-time generative engine enabling live user-generated content (UGC) gameplay through state-of-the-art AI World Models. Built to support dynamic, interactive, and sustained gameplay, it enables entire worlds to be generated and modified live through natural language, keyboard, or controller input. Two playable demos have been released: Urban chaos (GTA-style) and Coastal drift (Forza Horizon-style); both are fully generated on the fly [Details].

Chinese search giant Baidu announced the open source release of the ERNIE 4.5 model family. It consists of Mixture-of-Experts (MoE) models with 47B and 3B active parameters, with the largest model having 424B total parameters, as well as a 0.3B dense model. All models are publicly accessible under Apache 2.0 [Details].

Zhipu AI released a new open-source Vision-Language Model (VLM) GLM-4.1V-9B-Thinking. By introducing a "thinking paradigm" and leveraging reinforcement learning, the model significantly enhances its capabilities. It achieves state-of-the-art performance among 10B-parameter VLMs, matching or even surpassing the 72B-parameter Qwen-2.5-VL-72B on 18 benchmark tasks. It also demonstrates competitive or superior performance compared to closed-source models such as GPT-4o [Details].

Cloudflare introduced pay per crawl in private beta, which will let content creators control access and get paid. With pay per crawl, publishers can block AI crawlers, allow specific ones, charge for access, or grant free access. They’ll have full control over how their content is accessed [Details].

Kyutai Labs released an open-source 1.6B-parameter streaming text-to-speech model that sets a new state of the art in text-to-speech. Kyutai TTS is the first text-to-speech model that is also streaming in text: you can pipe in text as it's being generated by an LLM and Kyutai TTS will already start processing it, leading to ultra-low latency. Kyutai also open-sourced Unmute, a tool that allows text LLMs to listen and speak by wrapping them in Kyutai's text-to-speech and speech-to-text models [Details | Demo].

Alibaba Qwen team:

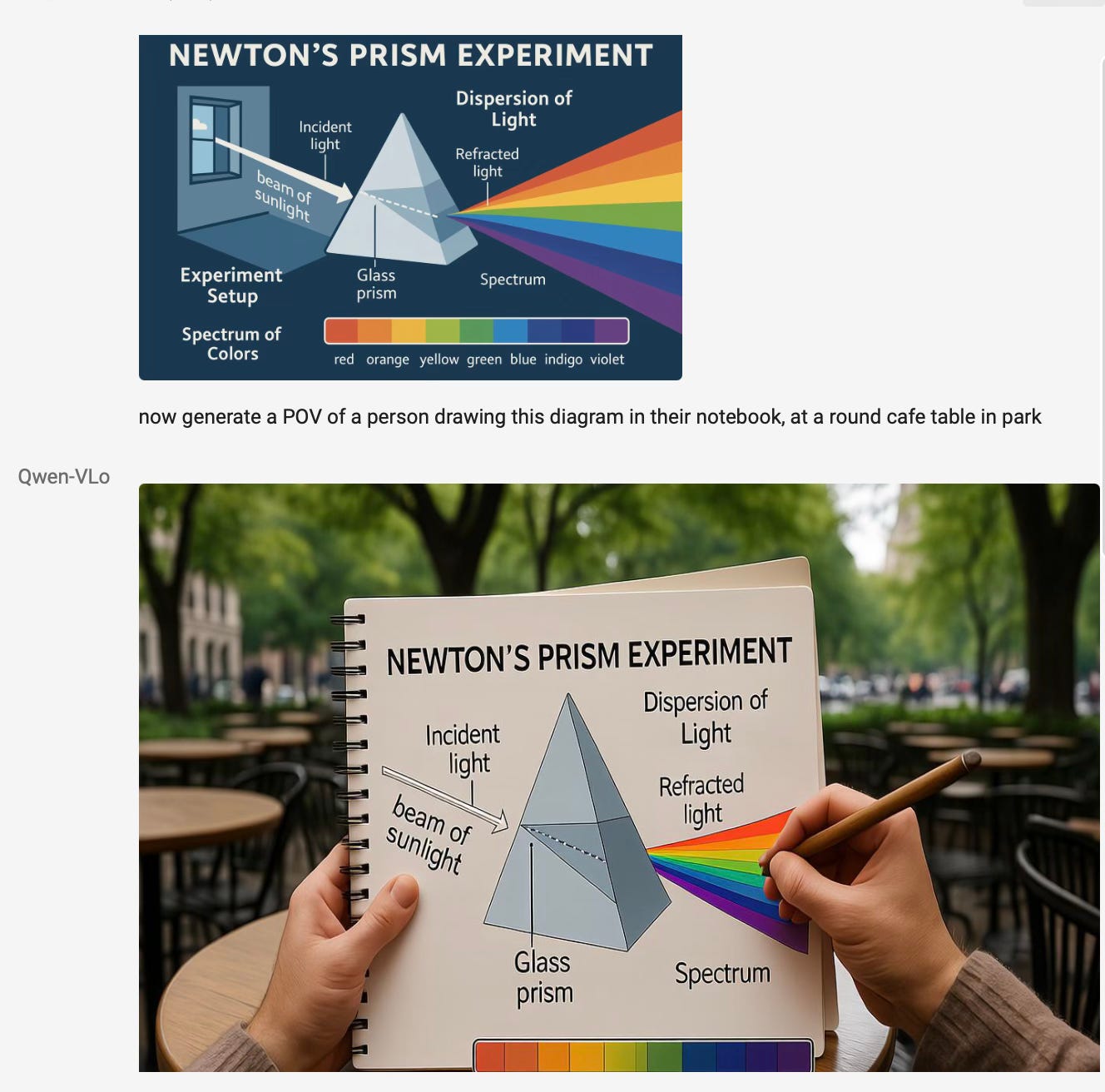

Qwen VLo, a unified multimodal understanding and generation model available via Qwen Chat. Qwen VLo introduces an innovative generative mechanism: a progressive top-to-bottom, left-to-right generation process that not only improves generation efficiency but is particularly suited for tasks requiring fine control, such as generating long paragraphs of text [Details].

Qwen-TTS, a text-to-speech model that supports Chinese-English bilingual synthesis and several Chinese dialects. It aims to produce natural and expressive speech, and is available via API [Details].

Sakana AI developed a new inference-time scaling algorithm, AB-MCTS (Adaptive Branching Monte Carlo Tree Search), that enables AI to effectively perform trial-and-error and allows multiple frontier AI models to cooperate. The AB-MCTS combination of o4-mini, Gemini-2.5-Pro, and DeepSeek-R1-0528 outperformed each of those models used individually by a large margin [Details].

Perplexity has rolled out Perplexity Max, a $200/month subscription that provides unlimited Labs usage, early access to Comet AI browser, and access to OpenAI o3-pro and Claude Opus 4 [Details].

Freepik announced unlimited image generation and editing in Premium+ and Pro plans [Details].

LlamaIndex released LlamaIndex Workflows 1.0, a lightweight framework for building complex, multi-step agentic AI applications in Python and TypeScript. This release introduces a standalone version of Workflows, with its own repository, package, and development path, making it easier to use Workflows outside of the LlamaIndex ecosystem [Details].

AIDC-AI (AI team at Alibaba International Digital Commerce Group) released Ovis-U1-3B, a 3-billion-parameter unified model that integrates multimodal understanding, text-to-image generation, and image editing capabilities. With only 3B parameters, Ovis-U1 shows strong performance across multiple benchmarks, even surpassing some task-specific models [Details].

Huawei announced the open-sourcing of its Pangu dense model with 7 billion parameters, the Pangu Pro MoE (Mixture-of-Experts) model with 72 billion parameters, and its model inference technology based on Ascend, which serves as the platform for AI infrastructure [Details].

Amazon launched DeepFleet, a new AI foundation model to power its robotic fleet, and deployed its one millionth robot [Details].

Cursor launched ‘Cursor Agents’ on web and mobile. Just like the agent that works in the IDE, agents on web and mobile can write code, answer complex questions, and scaffold out your work [Details].

Google is rolling out a Gemini Live update, enabling the AI assistant to connect with Google Maps, Calendar, Keep, and Tasks [Details].

Ai2 introduced SciArena, an open and collaborative platform for evaluating foundation models on scientific literature tasks [Details].

🔦 🔍 Weekly Spotlight

Articles/Courses/Videos:

Design Patterns for Securing LLM Agents against Prompt Injections

The New Skill in AI is Not Prompting, It's Context Engineering

Context Engineering - What it is, and techniques to consider

How to build Agentic research workflows using the OpenAI Deep Research API and the OpenAI Agents SDK - OpenAI cookbook

Open-Source Projects:

Genesys (Genetic discovery system) by Ai2: a distributed evolutionary system that uses LLM agents to discover better LLMs.

ScreenSuite by Hugging Face: A comprehensive benchmarking suite for evaluating Graphical User Interface (GUI) agents (i.e. agents that act on your screen) across several areas of ability: perception, and single-step and multi-step agentic behaviour.

C.O.R.E (Contextual Observation & Recall Engine): a shareable memory for LLMs which is private and portable.

🔍 🛠️ Product Picks of the Week

String by Pipedream: Prompt, run, edit, and deploy AI agents in seconds.

AutoCoder: Integrated front-end and back-end VibeCode generation tool, without needing Supabase.

Co-STORM by Stanford University: Get a Wikipedia-like report on your topic with AI.

Magic Animator: Animate your designs in seconds with AI.

Last Issue

Mercury chat diffusion LLM, Gemini CLI, Magenta RT, Hunyuan-A13B, Multimodal Reflection, OmniGen2, Matrix-Game, Warp 2.0, Anthropic's Desktop Extensions, Hailuo & HeyGen's video agents and more


Thanks for reading and have a nice weekend! 🎉 Mariam.