Calude 3 Opus, Train a 70b language model at home, Firewall for AI, Fast 3D Object Generation from Single Images, multimodal foundation model for any-to-any search tasks, and more

Hi. Welcome to this week's AI Brews for a concise roundup of the week's major developments in AI.

In today’s issue (Issue #54 ):

AI Pulse: Weekly News & Insights at a Glance

AI Toolbox: Product Picks of the Week

AI Skillset: Learn & Build

🗞️🗞️ AI Pulse: Weekly News & Insights at a Glance

🔥 News

Anthropic introduced the next generation of Claude: Claude 3 model family. It includes Opus, Sonnet and Haiku models. Opus is the most intelligent model, that outperforms GPT-4 and Gemini 1.0 Ultra on most of the common evaluation benchmarks. Haiku is the fastest, most compact model for near-instant responsiveness. The Claude 3 models have vision capabilities, offer a 200K context window capable of accepting inputs exceeding 1 million tokens, improved accuracy and fewer refusals [Details | Model Card].

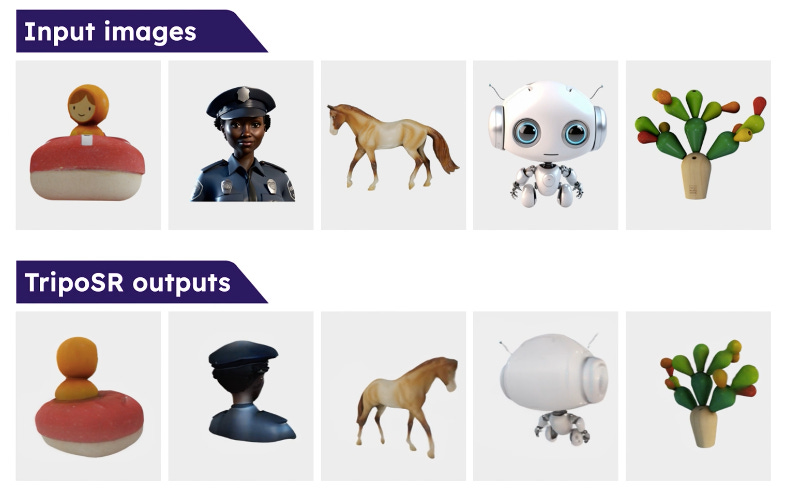

Stability AI partnered with Tripo AI and released TripoSR, a fast 3D object reconstruction model that can generate high-quality 3D models from a single image in under a second. The model weights and source code are available under the MIT license, allowing commercialized use. [Details | GitHub | Hugging Face].

Answer.AI released a fully open source system that, for the first time, can efficiently train a 70b large language model on a regular desktop computer with two or more standard gaming GPUs. It combines QLoRA with Meta’s FSDP, which shards large models across multiple GPUs [Details].

Inflection launched Inflection-2.5, an upgrade to their model powering Pi, Inflection’s empathetic and supportive companion chatbot. Inflection-2.5 approaches GPT-4’s performance, but used only 40% of the amount of compute for training. Pi is also now available on Apple Messages [Details].

Twelve Labs introduced Marengo-2.6, a new state-of-the-art (SOTA) multimodal foundation model capable of performing any-to-any search tasks, including Text-To-Video, Text-To-Image, Text-To-Audio, Audio-To-Video, Image-To-Video, and more [Details].

Cloudflare announced the development of Firewall for AI, a protection layer that can be deployed in front of Large Language Models (LLMs), hosted on the Cloudflare Workers AI platform or models hosted on any other third party infrastructure, to identify abuses before they reach the models [Details]

Scale AI, in partnership with the Center for AI Safety, released WMDP (Weapons of Mass Destruction Proxy): an open-source evaluation benchmark of 4,157 multiple-choice questions that serve as a proxy measurement of LLM’s risky knowledge in biosecurity, cybersecurity, and chemical security [Details].

Midjourney launched v6 turbo mode to generate images at 3.5x the speed (for 2x the cost). Just type /turbo [Link].

Moondream.ai released moondream 2 - a small 1.8B parameters, open-source, vision language model designed to run efficiently on edge devices. It was initialized using Phi-1.5 and SigLIP, and trained primarily on synthetic data generated by Mixtral. Code and weights are released under the Apache 2.0 license, which permits commercial use [Details].

Vercel released Vercel AI SDK 3.0. Developers can now associate LLM responses to streaming React Server Components [Details].

Nous Research released a new model designed exclusively to create instructions from raw-text corpuses, Genstruct 7B. This enables the creation of new, partially synthetic instruction finetuning datasets from any raw-text corpus [Details].

01.AI open-sources Yi-9B, one of the top performers among a range of similar-sized open-source models excelling in code, math, common-sense reasoning, and reading comprehension [Details].

Accenture to acquire Udacity to build a learning platform focused on AI [Details].

China Offers ‘Computing Vouchers’ upto $280,000 to Small AI Startups to train and run large language models [Details].

Snowflake and Mistral have partnered to make Mistral AI’s newest and most powerful model, Mistral Large, available in the Snowflake Data Cloud [Details]

OpenAI rolled out ‘Read Aloud’ feature for ChatGPT, enabling ChatGPT to read its answers out loud. Read Aloud can speak 37 languages but will auto-detect the language of the text it’s reading [Details].

🔦 Weekly Spotlight

Prompt Library by Dr. Ethan Mollick, Associate Professor at the Wharton School of the University of Pennsylvania [Link].

Whomane: Open-Source AI Wearable with a Camera [Link].

Stable Diffusion 3 Research Paper [Link].

New short course on DeepLearning.ai: Open Source Models with Hugging Face [Details].

Fun story from internal testing on Claude 3 Opus when running the needle-in-the-haystack eval [Link].

Open-Sora Plan: a project that aims to create a simple and scalable repo, to reproduce Sora [Link].

Claude 3's system prompt by Alignment Researcher at Anthropic [Link].

South Park Commons X OpenAI Hackathon winners and finalists [Link].

🔍 🛠️ AI Toolbox: Product Picks of the Week

BuildShip: Visually build workflows, automations and powerful backend logic for your apps, powered by AI.

Zapier Central: An experimental AI workspace where you can teach bots to work across 6,000+ apps

RenderNet: Generate consistent characters. Use source photos to set composition, outlines, character poses, and patterns in your generations.

Haiper: an AI video-generation tool released by DeepMind alums. You can only generate a two-second HD video and slightly lower-quality video of up to four seconds.

Thanks for reading and have a nice weekend! 🎉 Mariam.