Gemini 2.5 Pro, Qwen2.5-Omni, GPT-4o with native image generation, Reve Image 1.0, Anthropic's AI microscope, first real-time speech-to-speech VSM, Ideogram 3.0 and more

Gemini 2.5 Pro, Qwen2.5-Omni, GPT-4o with native image generation, Reve Image 1.0, Anthropic's AI microscope, first real-time speech-to-speech VSM, Ideogram 3.0 and more

Hey there! Welcome back to AI Brews - a concise roundup of this week's major developments in AI.

In today’s issue (Issue #98 ):

AI Pulse: Weekly News at a Glance

Weekly Spotlight: Noteworthy Reads and Open-source Projects

AI Toolbox: Product Picks of the Week

🗞️🗞️ AI Pulse: Weekly News at a Glance

Google released Gemini 2.5 Pro experimental version, a state-of-the-art thinking model on a wide range of benchmarks and debuts at #1 on LMArena by a significant margin. 2.5 Pro also shows strong reasoning and code capabilities, leading on common coding, math and science benchmarks. Gemini 2.5 Pro is available now in Google AI Studio and in the Gemini app for Gemini Advanced users, and will be coming to Vertex AI soon [Details].

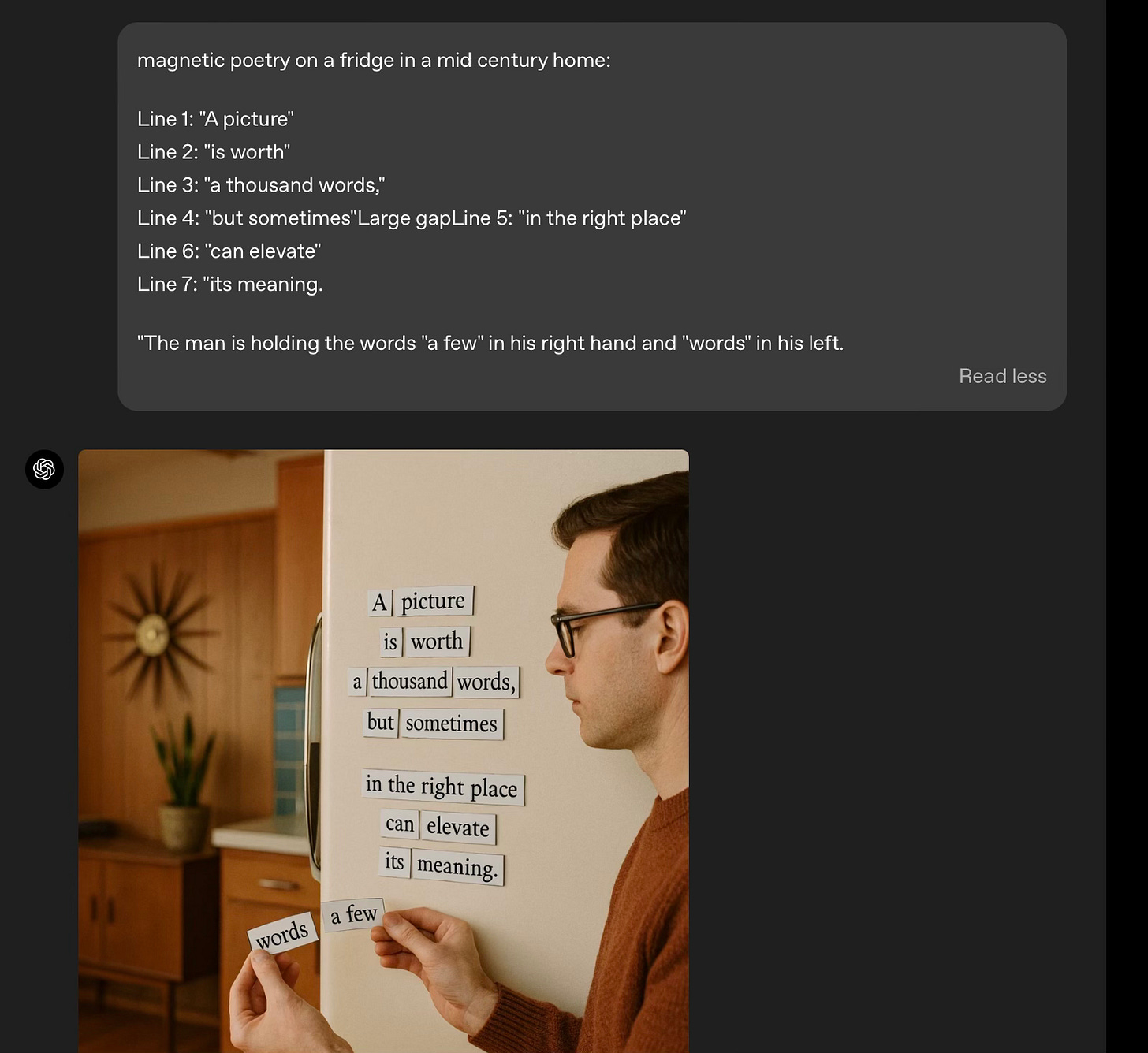

Open AI released GPT-4o with native image generation. It excels at accurately rendering text, precisely following complex prompts, and multi-turn generation. 4o image generation is rolling out to Plus, Pro, Team, and Free users as the default image generator in ChatGPT, with access coming soon to Enterprise and Edu. It’s also available to use in Sora [Details].

Reve AI, a startup founded by the former VP of Product at Stability and Adobe alumni introduced Reve Image 1.0, a new text-to-image model that has topped the Artificial Analysis leaderboard, beating the top image models like Recraft V3, Google’s Imagen 3, Midjourney v6.1, and Black Forest Lab’s FLUX.1.1 [pro]. You can try it for free [Details].

Ideogram introduced Ideogram 3.0 image model with significant improvements in image-prompt alignment, photorealism, and text rendering quality. In human evaluations, it outperformed Google’s Imagen 3, Flux Pro 1.1, and Recraft V3 [Details].

Owen released:

Qwen2.5-Omni, the new flagship end-to-end multimodal model in the Qwen series. This is an omni model, which is a single model that can understand text, audio, image, and video, and output text and audio. Qwen2.5-Omni-7B, is released under the license of Apache 2.0 [Details].

QVQ-Max: the first version of its visual reasoning model. This model can not only “understand” the content in images and videos but also analyze and reason with this information to provide solutions [Details].

Qwen2.5-VL-32B-Instruct. Compared to the previously released Qwen2.5-VL series models, this 32B VL model shows significant improvement in mathematical reasoning and enhanced accuracy and detailed analysis in tasks such as image parsing, content recognition, and visual logic deduction [Details]

Anthropic has introduced a new research tool to explore the internal workings of LLMs and understand how these models process information [Details].

Kyutai released MoshiVis, the first real-time, speech-to-speech Vision Speech Model (VSM), allowing for seamlessly discussing images with Moshi. It is an open-weights model released under Apache 2.0 [Details].

OpenAI will add support for Anthropic’s Model Context Protocol (MCP), across its products - available now in the agents SDK with support for ChatGPT desktop app and responses API coming soon [Details].

DeepSeek released an updated version of its V3 model, DeepSeek-V3-0324, with major improvements in reasoning performance, front-end development skills and tool-use capabilities. It became the highest scoring non-reasoning model in Artificial Analysis Intelligence Index, making it the first time for an open weights model [Details].

Claude can finally search the web now to provide more up-to-date and relevant responses [Details].

Open AI launched new speech-to-text and text-to-speech audio models in the API. This includes gpt-4o-mini-tts model that can be instructed on not just what to say but how to say it. New speech-to-text models include gpt-4o-transcribe and gpt-4o-mini-transcribe with improvements to word error rate and better language recognition and accuracy, compared to the original Whisper models [Details].

Cursor now lets you create Custom Modes, which allows you to compose new modes with tools and prompts that fits your workflow [Details].

Baidu launched two new foundation models: ERNIE 4.5 native multimodal foundation model and ERNIE X1, a multimodal deep-thinking reasoning model capable of tool use. ERNIE 4.5 outperforms GPT-4.5 in multiple benchmarks while priced at just 1% of GPT-4.5. [Details].

Stability AI introduced Stable Virtual Camera, in research preview. This multi-view diffusion model transforms 2D images into immersive 3D videos with realistic depth and perspective—without complex reconstruction or scene-specific optimization [Details].

Mistral released Mistral Small 3.1 under an Apache 2.0 license. It comes with improved text performance, multimodal understanding, and an expanded context window of up to 128k tokens. The model outperforms comparable models like Gemma 3 and GPT-4o Mini, while delivering inference speeds of 150 tokens per second [Details].

Roblox released Cube 3D, an open-source model that generates 3D models and environments directly from text and, in the future, image inputs [Details].

Nvidia introduced a portfolio of technologies to supercharge humanoid robot development, including Nvidia Isaac GR00T N1, the world’s first open, fully customizable foundation model for generalized humanoid reasoning and skills [Details].

Google added two new features to its Gemini application: Canvas and Audio Overviews. Canvas, is an interactive space for refining your documents and code and Audio Overviews transform your files into engaging podcast-style discussions. NotebookLM now supports creating mind maps [Details].

🔦 Weekly Spotlight: Noteworthy Reads and Open-source Projects

Vibe Coding 101 with Replit - free short course on DeepLearning.ai [Details].

State of Voice AI2025 Report - Deepgram’s 2025 State of Voice AI Survey, conducted in partnership with Opus Research [Details].

Anthropic Economic Index: Insights from Claude 3.7 Sonnet [Details].

‘Studio Ghibli’ AI image trend overwhelms OpenAI’s new GPT-4o feature, delaying free tier [Details].

Agno’s Agent UI is now open source [Details].

ByteDance’s InfiniteYou: Flexible Photo Recrafting While Preserving Your Identity [Details].

Gemma 3 Technical Report [Details].

🔍 🛠️ AI Toolbox: Product Picks of the Week

OpenAI.fm: A playground by OpenAI to try the new text-to-speech model

Incymo.ai: An AI-driven platform automating gameplay video ad creation and analysis for games

Marko by Nex: The AI agent for brand marketing that can create targeted, product-consistent photos and videos

Zapier MCP: Connect your AI to any app with Zapier MCP. It gives your AI assistant direct access to over 8,000+ apps and 30,000+ actions without complex API integrations.

Freepik AI Video Upscaler: Upscale your videos up to 4K in one click; powered by Topaz AI

SimplA: A single platform for building, deploying, and managing Agentic AI in any environment - on-prem, cloud, or hybrid.

Last week’s issue

All-in-one model for video creation & editing, DeepWork in Proxy, Gemini Robotics, Gemma 3, Native image generation in Gemini 2.0 Flash, Reka Flash 3, Command A, Figma to Bolt, AgentExchange and more

Hey there! Welcome back to AI Brews - a concise roundup of this week's major developments in AI.

Thanks for reading and have a nice weekend! 🎉 Mariam.