Google's reasoning model, New open-source physics AI engine, Odyssey's Explorer, OmniAudio, AI phone calling, FACTS Leaderboard, Meta Apollo, Global Talent Network, Falcon 3, Veo 2, DeepSeek-VL2 & more

Hi. Welcome to this week's AI Brews for a concise roundup of the week's major developments in AI.

In today’s issue (Issue #87):

AI Pulse: Weekly News & Insights at a Glance

AI Toolbox: Product Picks of the Week

🗞️🗞️ AI Pulse: Weekly News & Insights at a Glance

🔥 News

Google released Gemini Flash Thinking (Google's version of o1), an experimental model that explicitly shows its thoughts. Built on 2.0 Flash’s speed and performance, the model is trained to use thoughts to strengthen its reasoning. It tops every category on the Chatbot Arena leaderboard and is available in Google AI Studio and the Gemini API [Details].

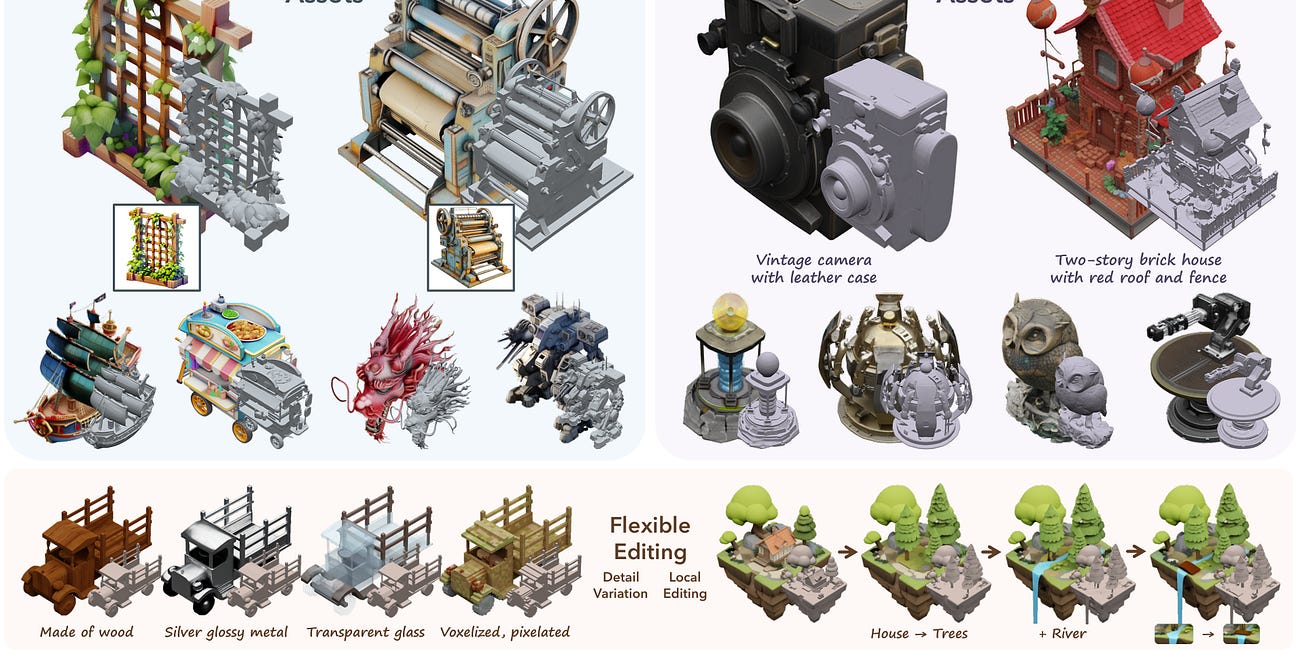

Odyssey introduced Explorer, an image-to-world model that transforms any image into a realized, detailed 3D world. Explorer is particularly tuned for generating photorealistic worlds [Details].

Genesis, an open-source, comprehensive physics simulation platform designed for general-purpose Robotics, Embodied AI, and Physical AI applications, was released. Project Genesis is a research collaboration of ~20 research labs. Its generative physics engine can generate 4D dynamical worlds powered by the physics simulation platform [Details].

Meta released Apollo, a new family of open-source Large Multimodal Models (LMMs) that can perceive hour-long videos efficiently. Apollo-7B is state-of-the-art among 7B LMMs, scoring 70.9 on MLVU and 63.3 on Video-MME [Details].

Cohere released Command R7B, the smallest, fastest, and final model in their R series of enterprise-focused large language models (LLMs) that can be served on low-end GPUs, a MacBook, or even CPUs [Details]

TII released the Falcon 3 family of Open Foundation Models, ranging from 1B to 10B parameters and including a 7B Mamba model. Falcon 3 was trained on 14 trillion tokens and supports 4 main languages (English, Spanish, Portuguese and French) [Details].

GitHub now offers a new free tier for GitHub Copilot in VS Code [Details].

More updates from OpenAI as part of ‘12 days of OpenAI’. ChatGPT can now work directly with more coding and note-taking apps, through voice or text, on macOS. Users in the US and Canada can talk to ChatGPT by calling 1-800-ChatGPT (1-800-242-8478), while users in other regions can send a WhatsApp message to the same number. Other updates: o1 is out of preview in the API, the Realtime API has been updated, there is a new way of fine-tuning, ChatGPT search is now available to free users, ‘Projects’ lets you organize ChatGPT chats, and more [Details].

Nexa AI released OmniAudio, a fast audio-language model for on-device deployment. It’s a 2.6B parameter open-source multimodal model that processes both text and audio inputs. It integrates three components: Gemma-2-2b, Whisper turbo, and a custom projector module, enabling secure, responsive audio-text processing directly on edge devices. Unlike traditional approaches that chain ASR and LLM models together, OmniAudio-2.6B unifies both capabilities in a single efficient architecture for minimal latency and resource overhead [Details].

Google released updated versions of its video and image generation models, Veo 2 and Imagen 3, and a new tool called Whisk. Veo 2 generates videos at resolutions up to 4K with improved physics simulation and understanding of cinematography. Imagen 3 produces brighter, better-composed images with more diverse art styles. These models are being rolled out in the Google Labs tools VideoFX and ImageFX. Whisk lets you input or create images that convey the subject, scene and style you have in mind [Details].

Runway launched Runway Talent Network, a media platform for creatives to showcase and discover creative work [Details].

DeepSeek released DeepSeek-VL2, an advanced series of large Mixture-of-Experts (MoE) Vision-Language Models that significantly improves upon its predecessor, DeepSeek-VL. The series includes three variants: DeepSeek-VL2-Tiny, DeepSeek-VL2-Small and DeepSeek-VL2, with 1.0B, 2.8B and 4.5B activated parameters respectively. DeepSeek-VL2 achieves competitive or state-of-the-art performance with similar or fewer activated parameters compared to existing open-source dense and MoE-based models [Details].

Nvidia introduced Jetson Orin Nano Super, a new compact generative AI supercomputer for edge devices at $249 [Details].

IBM released IBM Granite 3.1, an update to their Granite open-source LLM family with longer context, improved performance, function calling and new embedding models [Details].

Pika launched the Pika 2.0 model with superior text alignment and a ‘Scene Ingredients’ feature, which lets you upload images of people, objects and places to create customised video scenes [Details].

xAI is rolling out a new version of Grok-2, which is three times faster and offers improved accuracy, instruction-following, and multi-lingual capabilities, to all users on X for free. X has also given Grok a new AI image generator model “Aurora”, available in a new “Grok 2 + Aurora beta” option in the Grok model selector [Details].

NotebookLM, Google’s AI-powered research assistant, has a new product interface and features. You can now interact with AI hosts during Audio Overviews and manage content in a more intuitive way [Details].

Google DeepMind introduced FACTS Grounding, a comprehensive benchmark for evaluating the factuality of large language models, along with the FACTS Leaderboard [Details].

Anthropic made new features generally available in the Anthropic API including prompt caching, PDF support, message batches and more [Details].

Midjourney introduced ‘Moodboards’, which lets you personalize the models using collections of images [Details].

ElevenLabs introduced Eleven Flash v2.5, a new speech synthesis model designed for real-time applications and conversational AI. It generates speech with ultra-low latency (~75ms) across 32 languages [Details].

🔦 Weekly Spotlight

GraphRAG project by Microsoft: a data pipeline and transformation suite that is designed to extract meaningful, structured data from unstructured text using the power of LLMs [Link].

Building effective agents - by Anthropic [Link]

OpenAI Build Hour: On-Demand Library - Build Hours are a monthly live event for founders and developers to get specific ideas for how to use OpenAI’s APIs and Models [Link].

Qwen2.5 Technical Report [Link].

bolt.diy: the official open source version of Bolt.new, which allows you to choose the LLM that you use for each prompt to build full stack web apps [Link].

Amurex: an open-source AI meeting assistant that seamlessly integrates into your workflow [Link]

Google’s LearnLM outperformed other AI models in a recent technical study [Link]

Inferable: an open source platform that helps you build reliable LLM-powered agentic automations at scale [Link].

🔍 🛠️ AI Toolbox: Product Picks of the Week

Animate AI: All-in-one AI video generator for animation video series.

Tempo Labs: Visual editor for React, powered by AI

TemPolor: AI-powered music platform for content creators

Magnific AI’s Super Real: Realistic image generation specifically for professionals (architecture, interior design, films, photography, etc.).

tldraw computer: an experimental project by tldraw. Create AI-powered workflows and explore natural-language computing on tldraw's canvas.

Last week’s issue

Meta Motivo behavioral foundation model, Multimodal AI Agents, Gemini 2.0 Flash, Real-time Video and screen-sharing in the Multimodal Live API and ChatGPT, Phi-4, Sora, TRELLIS, Deep Research & more

Thanks for reading and have a nice weekend! 🎉 Mariam.
