Grok Code Fast 1, nano-banana, gpt-realtime, Wan2.2-S2V, ByteDance's USO, OLMoASR by Ai2, HunyuanVideo-Foley, Hermes 4, MAI-Voice-1, VibeVoice and more

Hey there! Welcome back to AI Brews - a concise roundup of this week's major developments in AI.

In today’s issue (Issue #111):

AI Pulse: Weekly News at a Glance

Weekly Spotlight: Noteworthy Reads and Open-source Projects

AI Toolbox: Product Picks of the Week

From our sponsors:

Make sense of what matters in AI in minutes, minus the clutter

There’s so much AI hype going around, it’s overwhelming to keep up.

… And that’s assuming you’re following the stuff that actually matters.

Fortunately, there's The Deep View, a newsletter that sifts through all the AI-related noise for you.

It gives you 5-minute insights on what truly matters right now in AI, and it’s trusted by over 452,000 subscribers, including executives at Microsoft, Scale, and Coinbase.

Sign up now for free and start making smarter decisions in the AI space.

🗞️🗞️ Weekly News at a Glance

xAI introduced Grok Code Fast 1 (codename sonic), a speedy and economical reasoning model built from scratch that excels at agentic coding. For the next 7 days, Grok Code Fast 1 is available for free on popular agentic coding platforms, including Cursor, GitHub Copilot, Cline, opencode, Windsurf, Roo Code, and Kilo Code [Details].

Google unveiled Gemini 2.5 Flash Image (aka nano-banana), a state-of-the-art image generation and editing model. It ranks #1 on LMArena’s Image Edit Leaderboard with a score of 1362. It enables you to blend multiple images into a single image, maintain character consistency for rich storytelling, make targeted transformations using natural language, and use Gemini's world knowledge to generate and edit images [Details].

Alibaba's Tongyi Lab released Wan2.2-S2V, an open-source 14B-parameter video generation model that takes a single image and an audio input and generates high-quality, synchronized video. It excels in film and television scenarios, producing realistic visual effects such as natural facial expressions, body movements, and professional camera work. It supports both full-body and half-body character generation and can handle a range of professional-level content creation needs, such as dialogue, singing, and performance [Details].

OpenAI released gpt-realtime, a more advanced speech-to-speech model that shows improvements in following complex instructions, calling tools with precision, and producing speech that sounds more natural and expressive. The Realtime API is out of beta and now supports remote MCP servers, image inputs, and phone calling through Session Initiation Protocol (SIP) [Details].

Ai2 released OLMoASR, a family of completely open automatic speech recognition (ASR) models trained from scratch on a curated, large-scale dataset. The models match or exceed Whisper’s zero-shot performance across most scales [Details].

Microsoft AI (MAI) introduced two models: MAI-Voice-1 and MAI-1-preview. MAI-Voice-1 is a lightning-fast, expressive, and natural speech generation model that can generate a full minute of audio in under a second on a single GPU. It already powers Copilot Daily and Podcasts, and is available to try as a new Copilot Labs experience. MAI-1-preview is a mixture-of-experts model currently undergoing public testing on LMArena [Details].

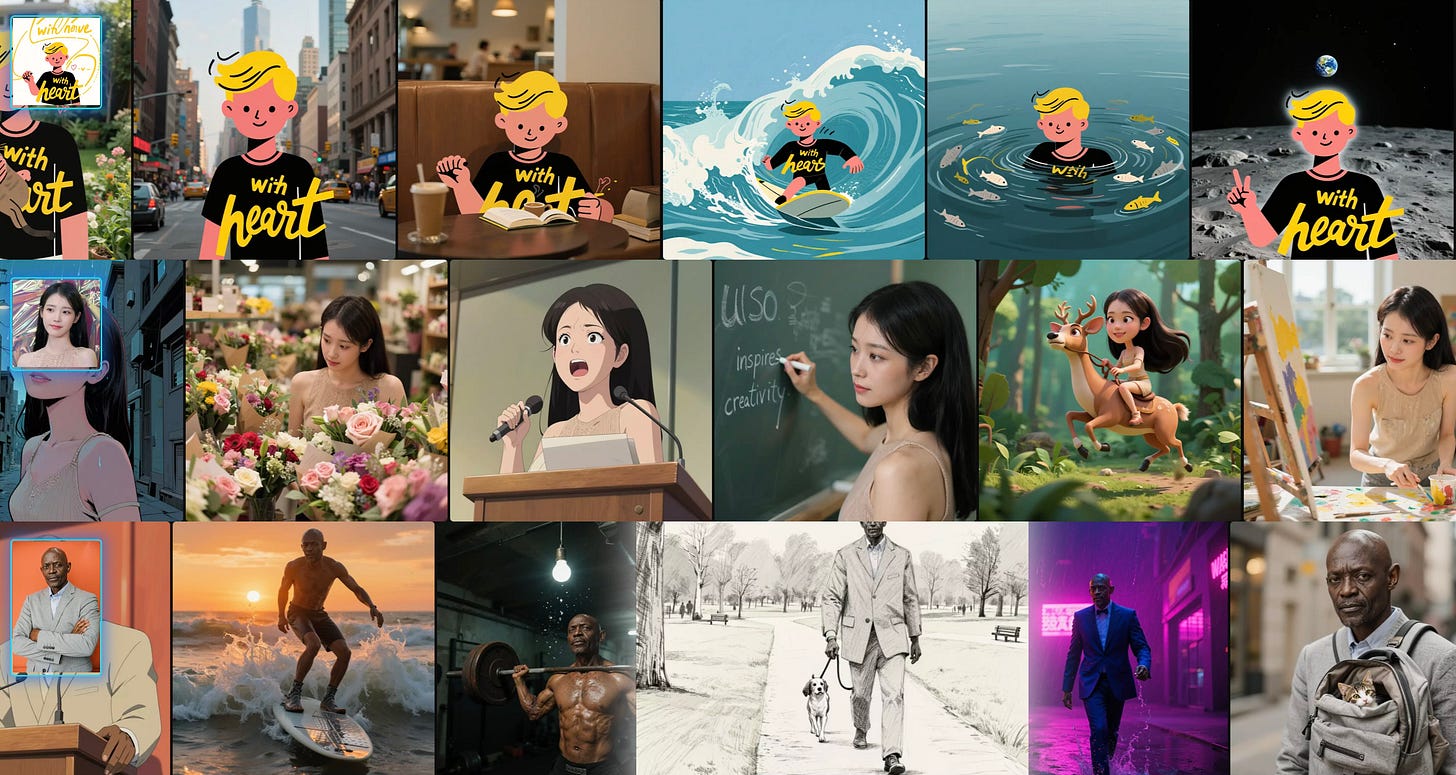

ByteDance released USO, a unified style-subject optimized customization model. USO can freely combine any subject with any style in any scenario, delivering outputs with high subject/identity consistency and strong style fidelity while keeping portraits natural rather than plastic-looking. The project is released under the Apache 2.0 License [Details | Demo].

Tencent Hunyuan released HunyuanVideo-Foley, a new open-source end-to-end Text-Video-to-Audio (TV2A) framework for generating high-fidelity audio. Trained on a 100k-hour multimodal dataset, the model generates context-aware soundscapes for a wide range of scenes, from natural landscapes to animated shorts. HunyuanVideo-Foley achieves state-of-the-art results on multiple benchmarks, surpassing all open-source models in audio quality, visual-semantic alignment, and temporal alignment [Details].

Nous Research released Hermes 4, a family of neutrally aligned, generalist open-weights models that combine self-reflective reasoning with broad instructional competence. Unlike models constrained by corporate ethics codes, Hermes 4 is designed to follow the user’s needs and system prompts without lecturing or sycophancy [Details].

Microsoft released VibeVoice, a frontier open-source Text-to-Speech model that can synthesize speech up to 90 minutes long with up to 4 distinct speakers, surpassing the typical 1-2 speaker limits of many prior models [Details].

Anthropic launched Claude for Chrome, an experimental browser extension for Max plan users that lets Claude interact with web pages while enforcing safety measures to reduce prompt injection risks [Details].

OpenAI updated Codex with new features, including a new IDE extension (available for VS Code, Cursor, and other VS Code forks), the ability to move tasks easily between the cloud and your local environment, code reviews in GitHub, and a revamped Codex CLI [Details].

Perplexity announced Comet Plus, a new subscription that provides access to premium publisher content and directs 80% of its revenue to those publishers based on user visits, AI citations, and agent actions [Details].

Video Overviews in Google’s NotebookLM are now available in 80 languages. Audio Overviews, already available in those languages, can now be more thorough [Details].

xAI released the model weights for Grok 2.5, its flagship model from last year. According to Elon Musk, Grok 3 will be open-sourced in about six months [Details].

Cloudflare’s AI Gateway now gives access to 350+ models across 6 providers, along with dynamic routing and more, through a single endpoint [Details].

Baidu launched MuseStreamer 2.0, an image-to-video model featuring natural voices and ambient sound, multi-character coordination with synchronized lip-sync, and lower pricing [Details].

The Google Translate app now offers Gemini-powered live translation for real-time conversations, with audio and on-screen translations in over 70 languages, plus a new Practice mode for personalized speaking and listening lessons [Details].

🔦 🔍 Weekly Spotlight

Articles/Courses/Videos:

How to build a coding agent: free workshop - Geoffrey Huntley

Make Your Website Conversational for People and Agents with NLWeb and AutoRAG

Agentic Browser Security: Indirect Prompt Injection in Perplexity Comet

The Context Window Problem: Scaling Agents Beyond Token Limits

We Put a Coding Agent in a While Loop and It Shipped 6 Repos Overnight

Open-Source Projects:

SuperClaude Framework: A meta-programming framework for Claude Code that enhances it with 21 slash commands, 14 agents, and 5 behavioral modes.

ContextForge MCP Gateway: Model Context Protocol gateway & proxy - unify REST, MCP, and A2A with federation, virtual servers, retries, security, and an optional admin UI.

🔍 🛠️ Product Picks of the Week

Rube by Composio: A universal MCP server that lets you take actions across 600+ applications from within your AI chat. It handles authentication and tool selection seamlessly and securely within the chat.

Lindy Build: Build web apps using AI. Lindy Build also tests its work by automatically finding and fixing issues.

Stax: An experimental developer tool by Google for AI evaluations.

Disco: A centralized platform for managing AI integrations using the Model Context Protocol. Disco.dev is free during its research preview.

Thanks for reading and have a nice weekend! 🎉 Mariam.