Molmo, Meta's Vision Models, Next-Token Prediction Multimodal model, AlphaChip, Hundred Film Fund, HuggingChat macOS, Updated models from OpenAI and Google and more


Hi. Welcome to this week's AI Brews for a concise roundup of the week's major developments in AI.

In today’s issue (Issue #78):

AI Pulse: Weekly News & Insights at a Glance

AI Toolbox: Product Picks of the Week

🗞️🗞️ AI Pulse: Weekly News & Insights at a Glance

🔥 News

Allen AI released Molmo (Multimodal Open Language Model), a new family of state-of-the-art vision-language models (VLMs). The smallest model, MolmoE-1B, nearly matches the performance of GPT-4V on both academic benchmarks and human evaluation. The largest, a 72B model, compares favorably against GPT-4o, Claude 3.5, and Gemini 1.5. Molmo can point at what it sees, enabling rich interactions in both the physical and virtual worlds [Details | Video | Demo].

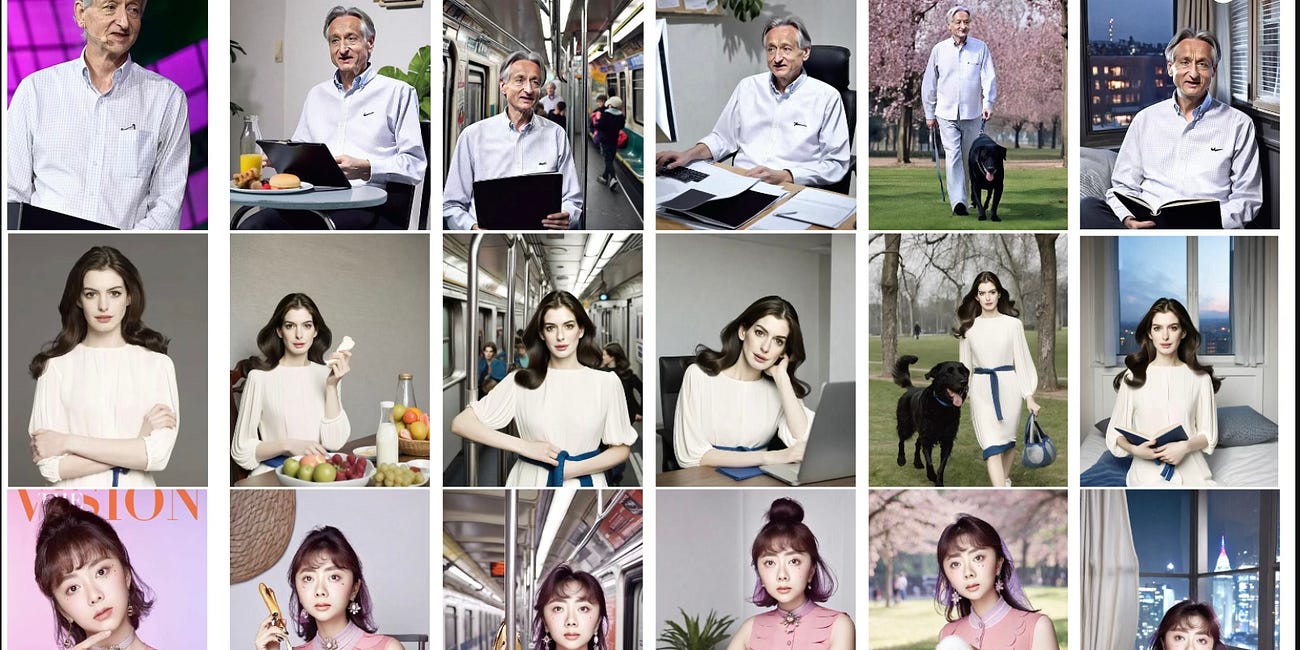

Meta released Llama 3.2, which includes small and medium-sized vision LLMs (11B and 90B) and lightweight, text-only models (1B and 3B) that fit onto edge and mobile devices. The Llama 3.2 vision models are competitive with Claude 3 Haiku and GPT-4o mini on image recognition and a range of visual understanding tasks. The 3B model outperforms the Gemma 2 2.6B and Phi-3.5-mini models on tasks such as following instructions, summarization, prompt rewriting, and tool use [Details].

Alibaba Group presented MIMO, a generalizable model that can synthesize character videos with controllable attributes (i.e., character, motion, and scene) from simple user inputs. The source code and pre-trained model will be released upon paper acceptance [Details].

BAAI released Emu3, a new suite of state-of-the-art multimodal models trained solely with next-token prediction. Emu3 is trained to predict the next token with a single Transformer on a mix of video, image, and text tokens. With a video in context, Emu3 can naturally extend the video and predict what will happen next. The model can simulate some aspects of the environment, people and animals in the physical world [Details].

Google released two updated production-ready Gemini models, Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002, along with reduced 1.5 Pro pricing, increased rate limits, 2x faster output, and 3x lower latency [Details].

OpenAI introduced a new moderation model, omni-moderation-latest, in the Moderation API. Based on GPT-4o, the new model supports both text and image inputs and is more accurate than the previous model, especially in non-English languages [Details].

Meta has introduced new multimodal capabilities for its AI assistant, Meta AI, including voice interactions and photo understanding and editing. Meta is testing new AI features for Reels including automatic video dubbing and lip-syncing [Details].

Hugging Face released HuggingChat macOS, a native macOS app that brings open-source language models straight to the desktop, with markdown support, web browsing, and code syntax highlighting [Details].

Deepgram unveiled the Deepgram Voice Agent API, a unified voice-to-voice API for AI agents that enables natural-sounding conversations between humans and machines [Details].

Google NotebookLM adds audio and YouTube support, plus easier sharing of Audio Overviews [Details].

Meta released the first official distribution of Llama Stack, which aims to simplify how developers build with Llama to support agentic applications and more [Details].

Runway launched The Hundred Film Fund to finance one hundred films that use AI to tell their stories. The fund currently stands at $5M, with the potential to grow to $10M [Details].

OpenAI rolls out Advanced Voice Mode with more voices and a new look [Details].

Meta unveiled Orion, a prototype of its augmented-reality glasses [Details].

OpenAI is launching the OpenAI Academy, which will invest in developers and organizations leveraging AI to help solve hard problems and catalyze economic growth in their communities [Details].

🔦 Weekly Spotlight

How AlphaChip transformed computer chip design - by Google DeepMind [Link].

PDF2Audio: Convert PDFs into an audio podcast, lecture, summary and others [Link].

napkins.dev: An open source wireframe to app generator. Powered by Llama 3.2 Vision & Together.ai [Link].

Prompt caching through the Anthropic API [Link].

🔍 🛠️ AI Toolbox: Product Picks of the Week

Motion: Automatically plan your day based on your tasks and priorities

Hoody AI: Anonymous access to chat with multiple models at once

Merlin AI: 26-in-one AI assistant to research, create and summarize

Last week’s issue

Qwen 2.5, Seed-Music, StoryMaker, Jina Embeddings V3, Multimodal RAG, Luma Labs and Runway APIs, CogVideoX image-to-video generation model and More


You can support my work via BuyMeaCoffee.

Thanks for reading and have a nice weekend! 🎉 Mariam.