Multi-robot collaboration, Grok 3, smallest video language model, Generative AI Model for Gameplay, AI co-scientist, Mistral Saba, Fiverr Go, Step-Video-T2V and Step-Audio, Pikaswaps & more

Hey there! Welcome back to AI Brews - a concise roundup of this week's major developments in AI.

In today’s issue (Issue #94):

AI Pulse: Weekly News at a Glance

Weekly Spotlight: Noteworthy Reads and Open-source Projects

AI Toolbox: Product Picks of the Week

🗞️🗞️ AI Pulse: Weekly News at a Glance

xAI unveiled an early preview of its Grok 3 reasoning model, which topped the Chatbot Arena leaderboard. Grok 3 has a context window of 1 million tokens and shows leading performance on both academic benchmarks and real-world user preferences. The model is now available for free through X and Grok.com [Details].

Figure introduced Helix, a generalist Vision-Language-Action (VLA) model that unifies perception, language understanding, and learned control to overcome several longstanding challenges in robotics. In a demo of collaborative grocery storage, a single set of Helix neural network weights runs simultaneously on two robots as they work together to put away groceries neither robot has ever seen before [Details].

Microsoft introduced Muse, the first World and Human Action Model (WHAM). It’s a generative AI model of a video game that can generate game visuals, controller actions, or both. Microsoft is open-sourcing the weights and sample data [Details].

Hugging Face released SmolVLM2, lightweight multimodal models designed to analyze video content. The 2.2B model is the go-to choice for vision and video tasks, while the 500M and 256M models represent the smallest video language models ever released [Details].

Google introduced AI co-scientist, a multi-agent AI system built with Gemini 2.0 as a virtual scientific collaborator to help scientists generate novel hypotheses and research proposals [Details].

Phind, the AI search engine, has been updated to go beyond text answers and include images, diagrams, interactive widgets, cards, and other rich visual outputs within the answer itself [Details].

StepFun released Step-Video-T2V and Step-Audio. Step-Video-T2V is a state-of-the-art (SoTA) pre-trained text-to-video model with 30 billion parameters that can generate videos of up to 204 frames. Step-Audio is a production-ready, open-source model family for intelligent and natural speech interaction [Details].

Perplexity AI launched a Deep Research tool that performs dozens of searches, reads hundreds of sources, and reasons through the material to autonomously deliver a comprehensive report. Non-subscribers can run up to 5 queries per day for free, while Pro users get up to 500 queries per day [Details].

Sakana AI introduced AI CUDA Engineer, the first comprehensive agentic framework for fully automatic CUDA kernel discovery and optimization [Details].

Pika launched Pikaswaps, a new feature to replace anything in your videos using photos you upload, or scenes you describe. Pika also launched an official iOS app [Link].

OpenAI introduced SWE-Lancer, a new benchmark of over 1,400 freelance software engineering tasks from Upwork, collectively valued at $1 million USD in real-world payouts. Claude 3.5 Sonnet performs best, followed by o1 and then GPT-4o [Details].

Mistral released Mistral Saba, a 24B parameter model trained on curated datasets from across the Middle East and South Asia. It supports Arabic and many Indian-origin languages, and is particularly strong in South Indian-origin languages such as Tamil [Details].

Google released PaliGemma 2 Mix, an upgraded vision-language model in the Gemma family. PaliGemma 2 Mix can handle tasks such as short and long captioning, optical character recognition (OCR), image question answering, object detection, and segmentation [Details].

Fiverr launched Fiverr Go, which lets freelancers working in voice-over, graphic design, and certain related fields train AI on their own content and charge customers for access to it [Details].

Meta announced LlamaCon, a developer conference for 2025, exploring the potential of Llama [Details].

LangChain released LangMem SDK, a library that helps your agents learn and improve through long-term memory [Details].

Perplexity AI released R1 1776, a DeepSeek-R1 reasoning model that has been post-trained to remove censorship [Details].

🔦 Weekly Spotlight: Noteworthy Reads and Open-source Projects

The Ultra-Scale Playbook: Training LLMs on GPU Clusters - a free, open-source book by Hugging Face.

OmniParser V2: Turning Any LLM into a Computer Use Agent.

Animate Anyone 2: High-Fidelity Character Image Animation with Environment Affordance.

Arch: an intelligent (edge and LLM) proxy designed for agentic applications. It helps you protect, observe, and build agentic tasks simply by connecting your existing APIs.

Mcp.run: Host, discover, publish, and manage Model Context Protocol servlets for LLMs + agents.

Aide: Open-source AI-native code editor. It is a fork of VS Code and integrates tightly with the leading agentic framework on SWE-bench Lite.

🔍 🛠️ AI Toolbox: Product Picks of the Week

Trupeer: Let AI turn your simple screen recordings into polished videos and detailed guides.

Beatoven.ai: AI composer for crafting background music.

MGX (MetaGPT X): AI agent development team by MetaGPT.

Proxy 1.0: AI-powered digital assistant that explores the web and executes tasks through simple conversation.

Last week’s issue

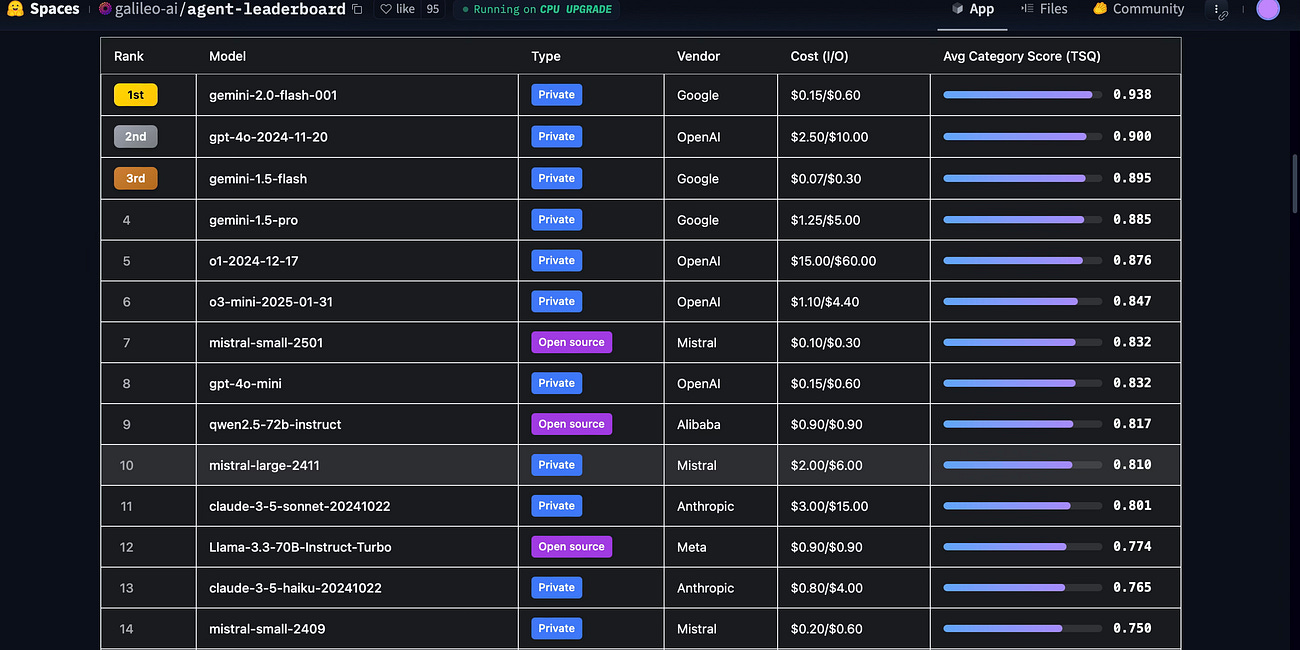

New unified reasoning and intuitive language model, Video Ads Foundation Models, Agent Leaderboard, 1.6B open-source expressive TTS, Mobile App development in Replit and Bolt, and more

Thanks for reading and have a nice weekend! 🎉 Mariam.