Ultra-long context, Qwen2-VL outperforms GPT-4o, new open-weights text-to-video model, Eagle multimodal large language model, fastest AI inference and more

Hi. Welcome to this week's AI Brews, a concise roundup of the week's major developments in AI.

In today’s issue (Issue #74):

AI Pulse: Weekly News & Insights at a Glance

AI Toolbox: Product Picks of the Week

From our partners:

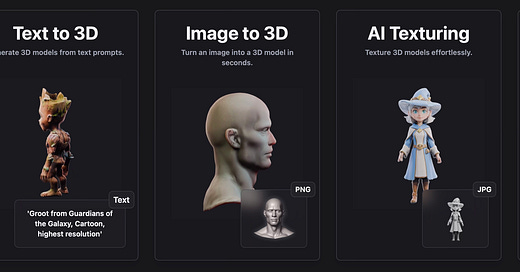

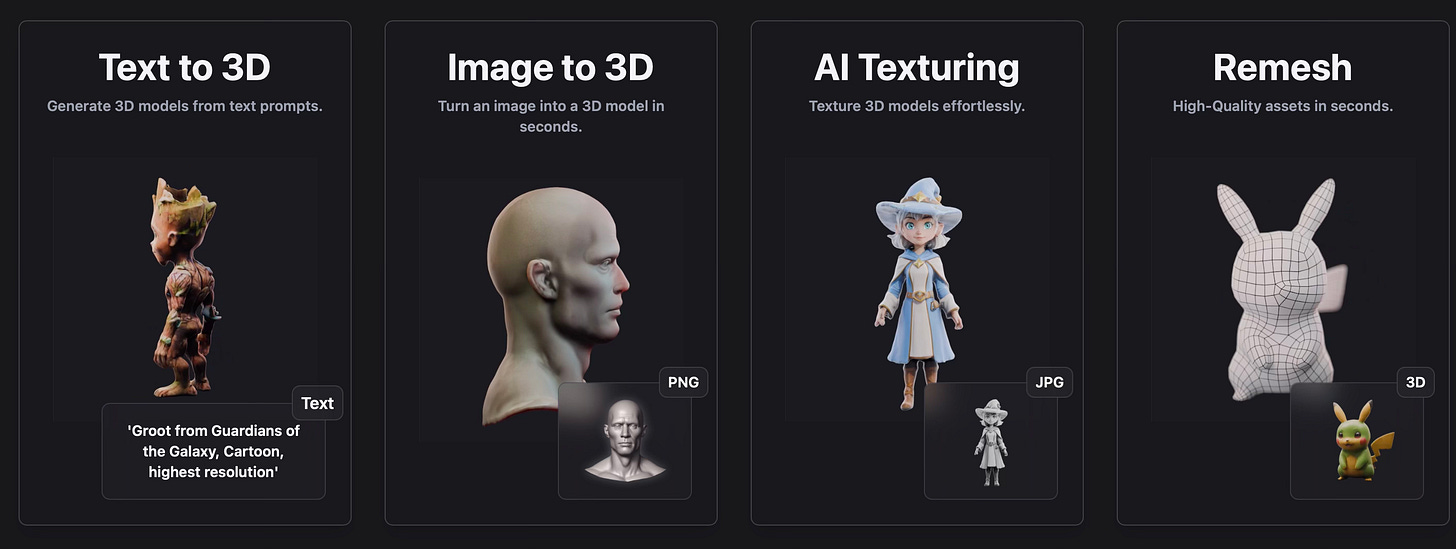

3D AI Studio: Create Stunning 3D Models with AI

🗞️🗞️ AI Pulse: Weekly News & Insights at a Glance

🔥 News

Alibaba Cloud released Qwen2-VL, a vision-language model that achieves state-of-the-art performance on visual understanding benchmarks and supports multilingual text recognition in images. It can understand videos longer than 20 minutes for video-based question answering, dialog, content creation, and more. The 72B model outperforms GPT-4o and Claude 3.5 Sonnet on most of these benchmarks. The open-source Qwen2-VL-2B and Qwen2-VL-7B are released under the Apache 2.0 license, while the largest model, Qwen2-VL-72B, is available via the official API [Details].

Magic has trained LTM-2-mini, a model with a 100M-token context window. For each decoded token, its sequence-dimension algorithm is roughly 1000x cheaper than the attention mechanism in Llama 3.1 405B at a 100M-token context. Magic is also partnering with Google and NVIDIA to build its next-generation AI supercomputer on Google Cloud [Details].

Zhipu AI and Tsinghua University released CogVideoX-5B, an open-weights text-to-video model. It generates six-second 720×480 videos at eight frames per second. The license of the smaller CogVideoX-2B model has also been changed to Apache 2.0 [Details].

Google researchers built GameNGen, a neural network that generates real-time gameplay for the classic shooter Doom without a traditional game engine. Each frame is predicted by a diffusion model, and the system runs playable gameplay at 20 frames per second on a single TPU [Details | Paper].

Playground announced a new graphic design tool powered by its next image foundation model, Playground v3 (beta). Available now, it lets you design T-shirts, logos, social media posts, memes, and more [Details].

NVIDIA released Eagle, a family of multimodal large language models (MLLMs) that use a mixture of vision encoders. It supports input resolutions above 1K and achieves strong results on multimodal LLM benchmarks, especially on resolution-sensitive tasks such as optical character recognition (OCR) and document understanding [Details].

Zyphra released Zamba2-1.2B, a hybrid model composed of state-space (Mamba) and transformer blocks. It achieves state-of-the-art performance among models under 2B parameters and is competitive with some significantly larger models. Compared with similar transformer-based models, it offers lower inference latency, faster generation, and a smaller memory footprint [Details].

Anthropic has published the system prompts used by Claude's apps and added a system prompt release notes section to its docs. These system prompts do not apply to the API [Details].

Cerebras introduced Cerebras Inference, which runs Llama 3.1 20x faster than GPU-based solutions at one-fifth the price. At 1,800 tokens/s on Llama 3.1 8B, it is 2.4x faster than Groq. Cerebras Inference is available to developers via API [Details].

Salesforce AI presented xGen-VideoSyn-1, a text-to-video (T2V) generation model that produces realistic scenes from textual descriptions. It can generate up to 14 seconds of 720p video [Paper].

Google is rolling out three experimental models: a new, smaller variant, Gemini 1.5 Flash-8B; a stronger Gemini 1.5 Pro model (better at coding and complex prompts); and an improved Gemini 1.5 Flash model [Details].

Anthropic has made "Artifacts" generally available to all users on their Free, Pro, and Team plans. With Artifacts, you have a dedicated window to instantly see, iterate, and build on the work you create with Claude [Details].

Google has introduced new features for Gemini, including the ability to create custom "Gems" (personalized AI experts for specific tasks) and improved image generation with the latest Imagen 3 model [Details].

Document upload and data analysis are now available to Gemini for Google Workspace add-on subscribers [Details].

🔦 Weekly Spotlight

CommandDash: AI assistant for integrating APIs and SDKs without reading the docs [Link].

Data and AI Trends 2024: report by Google Cloud [Link].

AgentOps: Python SDK for agent monitoring, LLM cost tracking, benchmarking, and more [Link].

Firecrawl: Turn entire websites into LLM-ready markdown or structured data. Scrape, crawl, and extract with a single API [Link].

Groq and Gradio for Realtime Voice-Powered AI Applications [Link].

Claude’s API now supports CORS requests, enabling client-side applications [Link].

🔍 🛠️ AI Toolbox: Product Picks of the Week

Vocal Remover: Separate the vocals from the music in a song for free with AI.

3D AI Studio: Generate 3D models, animations and textures in seconds.

Creatify 2.0: AI Video Ad Maker with a new text-to-video feature offering 9 styles including cartoonish, realistic, 3D, and more.

Vozo: Edit, dub, translate, and lip-sync your videos with prompts.

Last week’s issue

Jamba 1.5, Ideogram 2.0, Phi-3.5-MoE, Transfusion, Dream Machine 1.5, Mistral-NeMo-Minitron 8B, fine-tuning for GPT-4o and more

Hi there. AI Brews is back, and apologies for the missed issues! Sincere thanks for your messages and support; it means a lot!

You can support my work via BuyMeaCoffee.

Thanks for reading and have a nice weekend! 🎉 Mariam.