Dream Machine, Apple Intelligence, AI to understand animal communication, Mixture of Agents, Real-time Expressive Generative Humans, Skybox AI new model and more

Hi. Welcome to this week's AI Brews for a concise roundup of the week's major developments in AI.

In today’s issue (Issue #67):

AI Pulse: Weekly News & Insights at a Glance

AI Toolbox: Product Picks of the Week

Advertise in this newsletter to reach over 9,000 AI engineers, founders, and enthusiasts with your message.

🗞️🗞️ AI Pulse: Weekly News & Insights at a Glance

🔥 News

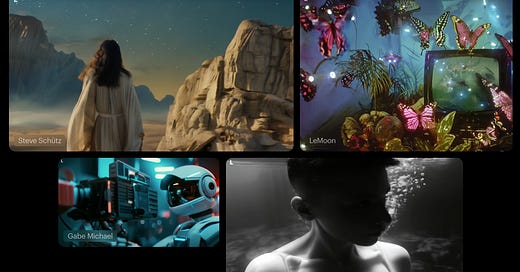

Luma AI introduced Dream Machine, a new video model for creating high-quality, realistic 5-second shots from text and images, generating 120 frames in 120 seconds. It is trained directly on videos and can create videos with consistent characters and accurate physics. It’s available to the public [Details].

Apple announced Apple Intelligence at WWDC 2024, its name for a new suite of AI features for the iPhone, Mac, and more. Starting later this year, Apple is rolling out a more conversational Siri, custom AI-generated “Genmoji,” and GPT-4o access that lets Siri turn to OpenAI’s chatbot for complex queries. The AI features will carry out actions across apps, manage notifications, write text for you, and summarize text in Mail and other apps. Apple says its privacy-focused system will first attempt to fulfill AI tasks locally on the device. If any data is exchanged with cloud services, it will be encrypted and deleted afterward. The features will be available only on the iPhone 15 Pro and 15 Pro Max, and on iPads and Macs with M1 or later chips [Details].

Apple shares details of the foundation models built into Apple Intelligence that have been fine-tuned for user experiences and taking in-app actions to simplify interactions across apps [Details].

Researchers from MIT, Microsoft and Google introduced DenseAV, a model that can learn language and localize sound in videos without supervision. DenseAV has never seen text, but it learns the meaning of words just by watching unlabeled videos: it learns language by predicting what it’s seeing from what it’s hearing, and vice versa. This approach could help in understanding new languages, such as dolphin or whale communication, which have no written form [Details | Code].

Stability AI released Stable Diffusion 3 Medium, a 2-billion-parameter SD3 model that can run on consumer PCs and laptops. The weights are available under an open non-commercial license and a low-cost ‘Creator License’ [Details | Hugging Face].

Suno released a new Audio Input feature, where you can make a song from any sound [Details].

Together AI introduced Mixture of Agents (MoA), a novel approach to harness the collective strengths of multiple LLMs. MoA adopts a layered architecture where each layer comprises several LLM agents. It surpasses GPT-4o on AlpacaEval 2.0 using only open-source models [Details | GitHub].
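To make the layered idea concrete, here is a toy sketch, not Together AI's code: each "agent" is a plain Python function standing in for an LLM call, and every agent in a layer receives the prompt plus all responses from the previous layer; a final aggregator merges the last layer's outputs. The names `mixture_of_agents`, `make_stub_agent`, and `concat_aggregator` are hypothetical, chosen only for illustration.

```python
from typing import Callable, List

# Hypothetical agent type: takes the user prompt plus the previous layer's
# responses and returns a new response. In real MoA these would be LLM calls.
Agent = Callable[[str, List[str]], str]


def mixture_of_agents(prompt: str, layers: List[List[Agent]], aggregator: Agent) -> str:
    """Run a layered pass: each layer sees all responses from the layer before it."""
    previous: List[str] = []
    for layer in layers:
        # Every agent in this layer answers, using the prior layer's outputs as context.
        previous = [agent(prompt, previous) for agent in layer]
    # A final aggregator synthesizes the last layer's responses into one answer.
    return aggregator(prompt, previous)


def make_stub_agent(name: str) -> Agent:
    """Stand-in for an LLM: reports its name and how many prior responses it saw."""
    def agent(prompt: str, prior: List[str]) -> str:
        return f"{name}(saw {len(prior)})"
    return agent


def concat_aggregator(prompt: str, prior: List[str]) -> str:
    """Toy aggregator: joins responses instead of asking an LLM to synthesize them."""
    return " | ".join(prior)


if __name__ == "__main__":
    layers = [
        [make_stub_agent("a1"), make_stub_agent("a2"), make_stub_agent("a3")],
        [make_stub_agent("b1"), make_stub_agent("b2")],
    ]
    print(mixture_of_agents("What is MoA?", layers, concat_aggregator))
    # prints: b1(saw 3) | b2(saw 3)
```

The stub output shows the key property of the layering: each second-layer agent saw all three first-layer responses before answering.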

Google DeepMind and Harvard built a ‘virtual rodent’ powered by AI. It was trained to mimic the whole-body movements of freely moving rats in a physics simulator [Details].

Apparate Labs introduced PROTEUS, a low-latency foundation model for generating highly realistic and expressive humans. Developers can apply for early access [Details].

Alibaba Cloud released VideoLLaMA 2, a set of Video Large Language Models (Video-LLMs) designed to enhance spatial-temporal modeling and audio understanding in video and audio-oriented tasks [Details].

Midjourney has launched a new feature ‘Personalization’ that takes note of the kinds of images you prefer and can generate images for you based on your preferences [Details].

Blockade Labs released Skybox AI Model 3.1, built from the ground up with a focus on realism. It can generate 8K, fully seamless, 360° worlds in 30 seconds [Details].

Flyhomes has acquired ZeroDown, a real estate startup backed by Sam Altman, and launched an AI-powered home search platform [Details].

Google shared research on how AI can create personalized health experiences tailored to individuals’ unique health journeys. The Personal Health Large Language Model (PH-LLM) is a fine-tuned version of Gemini, designed to generate insights and recommendations to improve personal health behaviors related to sleep and fitness. Using a multimodal encoder, PH-LLM is optimized both for textual understanding and reasoning and for interpreting raw time-series sensor data from wearables, such as heart rate variability and respiratory rate [Details].

Databricks launched five new Mosaic AI tools: Mosaic AI Agent Framework, Mosaic AI Agent Evaluation, Mosaic AI Tools Catalog, Mosaic AI Model Training and Mosaic AI Gateway [Details].

Google released RecurrentGemma 9B. RecurrentGemma is a family of open language models built on a novel recurrent architecture. Thanks to this architecture, RecurrentGemma requires less memory than Gemma and achieves faster inference when generating long sequences [Details].

Microsoft announced it is scrapping GPT Builder in Copilot Pro after just three months [Details].

Former Meta engineers launched Jace, an AI agent. Using their own AWA-1 (Autonomous Web Agent) model, Jace can operate a browser to interact with websites [Details].

🔦 Weekly Spotlight

Let's reproduce GPT-2 (124M) - video tutorial by Andrej Karpathy [Link].

Consistent character: Create images of a given character in different poses [Link].

New Transformer architecture could enable powerful LLMs without GPUs [Link].

LaVague: an open-source Large Action Model framework to develop AI Web Agents [Link].

Build agents from scratch, using Llama 3 with tool calling (via Groq) and LangGraph [Video].

Private Cloud Compute: A new frontier for AI privacy in the cloud by Apple Security Research [Link].

🔍 🛠️ AI Toolbox: Product Picks of the Week

Peek: Automatically organizes and summarizes your browser tabs using AI.

Wallpaper AI: A high-resolution wallpaper generator that uses AI to create wallpapers for smartphones or desktops.

Autodesigner 2.0: UI generator by Uizard. The Autodesigner 2.0 update introduces a conversational mode, letting you interact directly with Autodesigner to generate designs, add new elements, or modify any component or set of components with simple text prompts.

KREA: Generate and upscale images and videos. The new Video Enhancer is now open to everyone and can generate videos at frame rates of up to 120 fps.

Last week’s issue

Qwen2, Kling video model, Text to Sound Effects, No Language Left Behind model, video gaming AI assistant, Audio uploads and more

You can support my work via BuyMeaCoffee.

Thanks for reading and have a nice weekend! 🎉 Mariam.

I can't help but be fascinated by this issue. Two pieces of news particularly struck me. The first is the progress in using AI to better understand animal communication. It is a topic that interests and intrigues me deeply, and I see the animal world in general becoming more and more linked to the study of AI, as if nature could (once again) inspire many mechanisms for technological innovation, while these tools give us a better understanding of animals and what they do. The second is the AI-based home search platform launched by Flyhomes after acquiring the Sam Altman-backed ZeroDown, which deserves closer analysis. In many countries, including Italy, house hunting is a hot topic, both in the search process itself and, above all, in the quality of available housing. So an AI application that starts to address this problem is definitely worth following.